Edge detection

As we know a digitized image is represented in a computer as an array of two-dimensional pixels. A pixel is the smallest unit inside an image that can be uniquely captured using the camera. The number of pixels in an image depends on the resolution capacity of the camera. In a grayscale image, each pixel corresponds to the intensity level in that particular pixel and its value ranges from zero to 255. In color images, each pixel has red, green, and blue color levels with values ranging from zero to 255. Theoretically, a mixture of these three colors can generate any visually identifiable colors of human perception.

In edge detection, our aim is to identify portions of the image where information is densely populated. This means we are interested in identifying those pixels which form edges in an image. Edges in the image are identified by comparing them with the pixel intensity of neighboring pixels. Larger the difference or variation in brightness of pixel values, the larger the likelihood that it is an edge pixel. Therefore our interest is to identify those pixels which significantly differ from their neighboring pixels.

The application of calculus comes into play here. We know that calculus is the study of change just like geometry is the study of shapes. Assuming that the pixel values in an image form a discrete function, our aim is to find the points in the discrete function whose slope is higher. Since an image is a 2-D function, operators describing edges can be expressed by using partial differential equations. The points that lie on an edge can be detected by detecting the local maxima or minima of the first derivative or by detecting zero-crossing of the second derivative. The idea is described in figure (1) for 1-D analysis. However, since noise in an image often forms pseudo-edges we have to pre-process the image. Therefore edge detection can be described in four steps as below.

(a) Smoothing – to reduce noise without destroying true edges

(b) Enhancement – improve the quality of the edges (eg. by improving sharpness)

(c) Detection: find the edge pixels

(d) Localization: find the exact location of the edge. (eg. linking edges together)

The application of calculus comes into play here. We know that calculus is the study of change just like geometry is the study of shapes. Assuming that the pixel values in an image form a discrete function, our aim is to find the points in the discrete function whose slope is higher. Since an image is a 2-D function, operators describing edges can be expressed by using partial differential equations. The points that lie on an edge can be detected by detecting the local maxima or minima of the first derivative or by detecting zero-crossing of the second derivative. The idea is described in figure (1) for 1-D analysis. However, since noise in an image often forms pseudo-edges we have to pre-process the image. Therefore edge detection can be described in four steps as below.

(a) Smoothing – to reduce noise without destroying true edges

(b) Enhancement – improve the quality of the edges (eg. by improving sharpness)

(c) Detection: find the edge pixels

(d) Localization: find the exact location of the edge. (eg. linking edges together)

Figure (1) - Idea of edge detection in 1-D intensity signal function. Changes in edge can be detected using local maxima or minima of the first derivative or using the zero-crossing in the second derivative. (Source here)

The derivative of a 1-D signal can be computed by approximating the derivative by finite differences.

Therefore

Mask for 1D signal: [-1 0 1] (centered about x)

Computing the second derivative gives,

Mask for 1-D signal using second derivative: [1 -2 1] (centered about x)

Based on the 1-D theoretical analysis, the same idea can be extended to two-dimensional pixel planes, and one such extension is known as the Sobel operator. The Sobel operator performs an accurate approximation to calculate the derivative of a two-dimensional image by computing the spatial gradient measurement of an image. Sobel detector uses a pair of 3 x 3 convolution masks, one for x-direction and the other for y-direction. Sobel operator for a two-dimensional image is as follows and it slides over the image.

Figure (2) – Sobel operator for edge detection in images.

The magnitude of the gradient is calculated using the resultant formula:

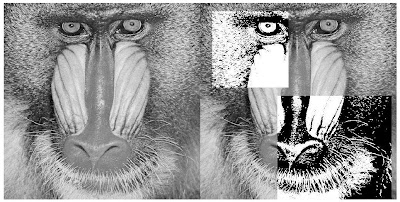

Some of the results of applying the Sobel operator in grayscale images and color images are shown below. In color images, we compute the intensity and use it for edge detection.

Intensity I = (red + green + blue) / 3

After computing the edges, thresholding is applied to highlight edges that are highly likely. Again we can highlight those edges which are in a particular direction by thresholding edge intensity image using computed direction information.

Figure (4) Original Image, Edge image, Thresholded edge image and direction thresholded edge image (clockwise). ROI (x,y,sx,sy) = (10,10,450,620), threshold value = 80 and direction threshold value = 80 to 90 degrees.

Figure (5) Original Image, Edge image, Thresholded edge image and direction thresholded edge image. The ROI (x,y,sx,sy) = (10,10,450,620), threshold value = 80 and direction threshold value = 70 +- 10 degrees.

Figure (6) Original Image, Edge image, Thresholded edge image and direction thresholded edge image. The ROI (x,y,sx,sy) = (10,10,550,550), threshold value = 80 and direction threshold value = 45 +- 10 degrees.

Circle detection using Hough transform

Circle detection in image processing is done using Hough transform. Hough transform is a feature extraction technique to find imperfect instances of objects within a certain class or shape. This is done by parameterization and then voting the parameter space to find local maxima. First, we compute the edges of the image using edge detection. Then we threshold the edge image so that we can find significant edges. Now we have to find those edges which form a circle. Note that after edge detection circumference of the circles might not be connected clearly. So we apply Hough transform.

A circle can be described completely with three pieces of information: the center (a, b) and the radius R.

x = a + Rcosθ

y = b + Rsinθ

y = b + Rsinθ

When the θ varies from 0 to 360, a complete circle of radius R is generated. We note that for a circle the parameters (a, b, R) are constant. In the parameter space, we plot the coordinates with (a, b) boundary equivalent to the set of all possible centers in a given image where θ ranges from 0 to 360. For each edge point that correspond to (x, y, R) of a particular circle in real space we draw the sphere in the corresponding (a, b, R) parameter space. If we are looking for circles of a particular radius R then we have a 2D parameter space.

Figure (7) – Each point in the geometric space (left) generates a circle in parameter space (right). The circles in the parameter space intersect at (a,b) the center of the circle in geometric space. (source)

Rearranging the equations we get

a = x1 – Rcosθ

b = y1 – Rsinθ

After plotting the circles in parameter space, we will note that the points at which circles in parameter space intersect correspond to the center of the circle in real space. To find circles that are more likely true we compute intersections that have a high density. This is done by sampling the parameter space with a suitable bin size. Once we compute the local maxima we highlight the circle in the original space. Some results for both greyscale and color images are shown below.

Figure (8) Original Image, Edge image, Thresholded edge image, PSpace image and Resulted circle. The ROI (x,y,sx,sy) = (10,10,300,300), threshold value = 80 and radius = 33 px.

Figure (10) Original Image, Edge image, Thresholded edge image, PSpace image and Resulted circle. The ROIs (x,y,sx,sy) = (110 10 200 200)(110 280 200 200), threshold value = 80 and radius = 90 px.

Figure (11) Original Image, Edge image, Thresholded edge image, PSpace image and Resulted circles. The ROIs (x,y,sx,sy) = (180 220 80 80) (180 320 80 80), threshold value = 80 and radius = 11 px.

I have included sample code in c++ for edge detection and Hough transform. Code for classes ROI, Images are excluded.