I wanted to write about my project work at college. I had always wanted to build something interesting, and this idea came up at random, though I can't recall how: a price finder website. Fair warning, it's formal writing again!

In this age of online shopping, customers often find the same product sold at different prices on different shopping websites. They usually want to buy the right product at the lowest cost, so a search engine that points them to the best website to buy from would be useful. We use web crawlers and screen scrapers to collect product prices from shopping websites and store them in an indexed database. We aim to develop a heuristic search engine that accepts the name(s) of the product as input and returns links to the best websites as output.

Concept description

First, I developed a web crawler module that downloads all the pages from the shopping websites we need. As a prototype, I downloaded 5 major book shopping websites in India (books.rediff.com, flipkart.com, eshop.talash.com/books, gobookshoping.com, and nbcindia.com).

In the next module, I needed to create a user interface using JSP in which the user enters the name of the product(s) they would like to purchase. The request is processed, and the results are then displayed to the customer using a searcher and a price finder. The results show descriptions of the websites selling the requested product. The user can then follow a link directly to make the purchase.

A simple diagram demonstrating the concept

Design of web crawler module

The web crawler I wrote works by following hyperlinks on the shopping website, excluding external hyperlinks that point to other domains. The links are extracted from the HTML content of each page using regular expressions and the Jericho HTML parser. The process is repeated until all pages of the chosen shopping website are downloaded.
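The link-following step can be sketched roughly as below. This is not the project's original code: it uses only a regular expression (no Jericho), the class and method names are made up for illustration, and the `fetcher` function stands in for the real HTTP download so the example runs without a network.

```java
import java.net.URI;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Function;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class SameSiteCrawlerSketch {
    // Simplified pattern for href="..." or href='...' inside <a> tags.
    private static final Pattern HREF = Pattern.compile(
            "<a\\s[^>]*?href\\s*=\\s*[\"']([^\"']+)[\"']", Pattern.CASE_INSENSITIVE);

    /** Returns absolute links found in html that stay on the same host as pageUrl. */
    static List<String> internalLinks(String pageUrl, String html) {
        URI base = URI.create(pageUrl);
        Set<String> links = new LinkedHashSet<>();
        Matcher m = HREF.matcher(html);
        while (m.find()) {
            String href = m.group(1).trim();
            int hash = href.indexOf('#');            // drop "#fragment" parts
            if (hash >= 0) href = href.substring(0, hash);
            if (href.isEmpty()) continue;
            try {
                URI target = base.resolve(href);     // make relative links absolute
                String host = target.getHost();
                if (host != null && host.equalsIgnoreCase(base.getHost())) {
                    links.add(target.toString());    // keep internal links only
                }
            } catch (IllegalArgumentException e) {
                // Malformed link: skip it.
            }
        }
        return new ArrayList<>(links);
    }

    /** Breadth-first crawl from startUrl, visiting at most maxPages URLs. */
    static Set<String> crawl(String startUrl, Function<String, String> fetcher, int maxPages) {
        Set<String> visited = new LinkedHashSet<>();
        Deque<String> queue = new ArrayDeque<>();
        queue.add(startUrl);
        while (!queue.isEmpty() && visited.size() < maxPages) {
            String url = queue.poll();
            if (!visited.add(url)) continue;         // already seen
            String html = fetcher.apply(url);        // hypothetical download step
            if (html != null) queue.addAll(internalLinks(url, html));
        }
        return visited;
    }

    public static void main(String[] args) {
        // Made-up in-memory "website" used instead of real downloads.
        Map<String, String> pages = new HashMap<>();
        pages.put("http://shop.example/", "<a href=\"/a\">A</a> <a href=\"http://other.example/\">ext</a>");
        pages.put("http://shop.example/a", "<a href='/b#top'>B</a>");
        pages.put("http://shop.example/b", "");
        System.out.println(crawl("http://shop.example/", pages::get, 10));
    }
}
```

A real crawler would also need politeness delays, robots.txt handling, and URL normalization, none of which are shown here.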

The downloaded webpage is in HTML format, so the next step is to extract the text from it. For that, I used an HTML parser library called Jericho. The downloaded pages are stored in a database along with their address, text, and HTML content. I used the latest version of an open-source database called H2 for this purpose. Next, the text column in the database has to be indexed to make search operations faster. For this, I used the open-source Lucene text indexer, which I found to be efficient. Finally, the name of the downloaded shopping website is written to a text file for later use by the searcher.
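To see why indexing the text makes searching faster, here is a toy inverted index. It is only a stand-in for the idea behind a text indexer, not Lucene's API and not the project's code; the class name `TinyTextIndex` and its methods are invented for this sketch.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class TinyTextIndex {
    // term -> ids of the pages whose text contains that term
    private final Map<String, Set<Integer>> postings = new HashMap<>();

    /** Splits the page text into lowercase terms and records the page id for each. */
    void add(int pageId, String text) {
        for (String term : text.toLowerCase(Locale.ROOT).split("[^a-z0-9]+")) {
            if (!term.isEmpty()) {
                postings.computeIfAbsent(term, k -> new TreeSet<>()).add(pageId);
            }
        }
    }

    /** Returns the ids of pages that contain every one of the given terms. */
    Set<Integer> searchAll(String... terms) {
        Set<Integer> result = null;
        for (String term : terms) {
            Set<Integer> ids = postings.getOrDefault(
                    term.toLowerCase(Locale.ROOT), Collections.<Integer>emptySet());
            if (result == null) {
                result = new TreeSet<>(ids);
            } else {
                result.retainAll(ids);   // intersect: all terms must be present
            }
        }
        return result == null ? new TreeSet<Integer>() : result;
    }

    public static void main(String[] args) {
        TinyTextIndex index = new TinyTextIndex();
        index.add(1, "Wings of Fire by Abdul Kalam");   // made-up page text
        index.add(2, "Fire safety handbook");
        System.out.println(index.searchAll("fire", "kalam"));
    }
}
```

A lookup touches only the entries for the query terms instead of scanning the text of every stored page, which is the main reason an index pays off as the number of pages grows.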

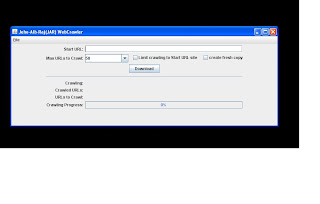

I was able to create a user interface for the web crawler using Java. It had an option to specify the maximum number of pages to download, with a progress bar indicating the status of the crawl. It also had options to pause and resume the download. Several instances of the crawler can be started at once, so multiple websites can be downloaded simultaneously to make better use of the network bandwidth. It runs on any machine with a Java runtime environment installed.

Design of the user end module

The user end module is similar to the Google search page. It ran on an Apache Tomcat server. The user query is translated into a database search query by using a query parser.

Design of query parser sub-module

If a quantity is specified in the query, it is saved and used to calculate the total price at a later stage. In the above example, ‘wings of fire’ AND ‘Abdul Kalam’ means: look for pages that contain both the terms “wings of fire” and “Abdul Kalam”.
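A query parser along these lines might look like the sketch below. The input syntax is an assumption made purely for illustration (an optional leading quantity, then comma-separated phrases), since the exact format the project accepted is not described here; `ParsedQuery` and `QueryParserSketch` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Result of parsing: the saved quantity and the AND-joined phrase query. */
class ParsedQuery {
    final int quantity;
    final String searchExpression;

    ParsedQuery(int quantity, String searchExpression) {
        this.quantity = quantity;
        this.searchExpression = searchExpression;
    }
}

class QueryParserSketch {
    // Assumed syntax: "<quantity> <phrase>, <phrase>, ..." with the quantity optional.
    private static final Pattern LEADING_QUANTITY = Pattern.compile("^(\\d{1,6})\\s+(.*)$");

    static ParsedQuery parse(String userInput) {
        String rest = userInput.trim();
        int quantity = 1;                       // default when none is given
        Matcher m = LEADING_QUANTITY.matcher(rest);
        if (m.matches()) {
            quantity = Integer.parseInt(m.group(1));
            rest = m.group(2);
        }
        List<String> phrases = new ArrayList<>();
        for (String part : rest.split(",")) {
            String phrase = part.trim();
            if (!phrase.isEmpty()) {
                phrases.add("\"" + phrase + "\"");   // quote each phrase
            }
        }
        // All phrases must appear on a matching page, hence AND.
        return new ParsedQuery(quantity, String.join(" AND ", phrases));
    }

    public static void main(String[] args) {
        ParsedQuery q = parse("2 wings of fire, Abdul Kalam");
        System.out.println(q.quantity + " x " + q.searchExpression);
    }
}
```

One known weakness of this assumed syntax: a title that itself starts with a number followed by a space would have that number read as the quantity.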

Design of searcher sub-module

The job of the searcher module is to run the parsed user query. Using multi-threading, the translated query is searched on each of the databases concurrently.
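The concurrent search could be organised with a thread pool, one task per shopping site. In this sketch each site's database lookup is represented by a plain `Function` from query to matching page addresses, which is an assumption made so the example runs without H2 or Lucene; `ConcurrentSearcherSketch` is a hypothetical name.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Function;

class ConcurrentSearcherSketch {
    /** Runs the same query against every site's searcher in parallel. */
    static Map<String, List<String>> searchAll(
            Map<String, Function<String, List<String>>> siteSearchers, String query) {
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, siteSearchers.size()));
        try {
            // Submit one task per site so the lookups overlap in time.
            Map<String, Future<List<String>>> pending = new LinkedHashMap<>();
            for (Map.Entry<String, Function<String, List<String>>> e : siteSearchers.entrySet()) {
                Callable<List<String>> task = () -> e.getValue().apply(query);
                pending.put(e.getKey(), pool.submit(task));
            }
            // Collect the results in the original site order.
            Map<String, List<String>> results = new LinkedHashMap<>();
            for (Map.Entry<String, Future<List<String>>> e : pending.entrySet()) {
                results.put(e.getKey(), e.getValue().get());
            }
            return results;
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("search interrupted", ex);
        } catch (ExecutionException ex) {
            throw new IllegalStateException("a site search failed", ex.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        // Made-up searchers standing in for per-site database queries.
        Map<String, Function<String, List<String>>> sites = new LinkedHashMap<>();
        sites.put("siteA", q -> Arrays.asList("http://a.example/page-about-" + q.length()));
        sites.put("siteB", q -> Collections.<String>emptyList());
        System.out.println(searchAll(sites, "\"wings of fire\""));
    }
}
```

With one thread per site, the total wait is roughly that of the slowest site rather than the sum of all of them.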

Design of price finder sub-module

This module works inside the searcher module. Its job is to find the price of the product. Using the searcher's result set, it fetches the pages that contain the user query. For each of these pages, a heuristic is applied to extract the price of the specified product. Finally, the product name, its price, and other details are cached for future queries.

The logic used to find the price

The assumption is that most shopping websites display products in the format below.

Name of the product … some details … Price … reduction rates … Reduced price

I made use of regular expressions to extract the price by looking for “product name” and then looking for “currency”.
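The "find the product name, then the next currency marker" idea can be sketched as follows. The currency markers (`Rs`, `Rs.`, `INR`), the class name, and the sample text are assumptions for illustration; the project's actual expressions are not shown in this post.

```java
import java.util.OptionalDouble;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class PriceFinderSketch {
    // Assumed currency markers followed by an amount such as 295 or 1,250.50.
    private static final Pattern PRICE = Pattern.compile(
            "\\b(?:Rs\\.?|INR)\\s*([0-9][0-9,]*(?:\\.[0-9]+)?)", Pattern.CASE_INSENSITIVE);

    /** Returns the first price that appears after the product name, if any. */
    static OptionalDouble firstPriceAfter(String pageText, String productName) {
        // Locate the product name, ignoring case and treating it literally.
        Matcher name = Pattern.compile(Pattern.quote(productName), Pattern.CASE_INSENSITIVE)
                .matcher(pageText);
        if (!name.find()) {
            return OptionalDouble.empty();
        }
        // Search for a currency marker starting right after the name.
        Matcher price = PRICE.matcher(pageText);
        if (price.find(name.end())) {
            String digits = price.group(1).replace(",", "");
            return OptionalDouble.of(Double.parseDouble(digits));
        }
        return OptionalDouble.empty();
    }

    public static void main(String[] args) {
        // Made-up page text, not taken from any real listing.
        String text = "Some Book Title Paperback, 200 pages Rs. 295 15% off Rs. 250";
        System.out.println(firstPriceAfter(text, "some book title"));
    }
}
```

This returns the first amount after the name, which under the assumed layout is the list price. Picking the reduced price instead would mean continuing to the later match, and a real page would also need a limit on how far past the name to look.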

One example, found at books.rediff.com, is shown below.

Everyday Eating For Babies And Children … [price] … [discount] off … [reduced price]

The user enters the name of a product, or a list of products, that they want to purchase. An additional option was provided to view the cached copy of a page, excluding images, so that the user can still see the content if the original web server is down.